Pt2: Adding a DVWP Form to the page

Pt3: Update the DVWP Form using a Drop-Down

Pt4: Trimming Drop Down List results using CAML

Pt5: Force Selection within Drop Down List

Pt6: Bonus: Embed documents to page

If this post doesn’t make any sense, please read over the first post in this series. I’m going to keep building on each post and then tie them all together at the end.

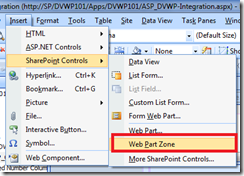

Adding the Web Part Zone

I always add a Web Part Zone to every custom page that I create. Adding these zones allows you to easily modify the page by clicking Site Actions > Edit Page. First, let’s click in the correct area of the table so we get the placement of this zone just right. If you remember, we had a table with 2 columns and 2 rows. The first cell in this table is where we added the data source and the ASP.NET control. Directly below that, let’s add the zone. Refer to this screenshot if this sounds confusing.

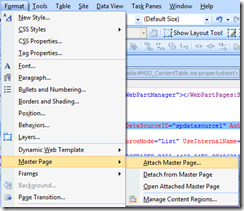

With that out of the way, here’s how to add a Web Part Zone: Click Insert > SharePoint Controls > Web Part Zone.

Now onto the meat and potatoes…

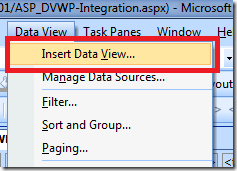

What I like to do is click on that zone and insert my Web Parts. After all, that is what it says to do, right? Doing so makes sure you are adding the DVWP to the correct location. Here are the steps to add the Edit Form to the page: Click Data View > Insert Data View…

Find your Shared Documents Library on the right within the Data Source Library Pane. Click on the drop-down menu and select Show Data.

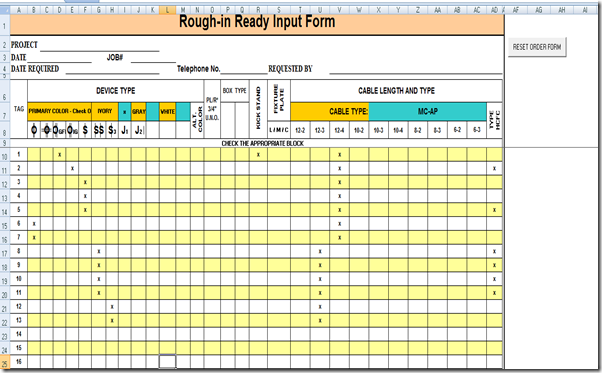

This will display all of the columns within your library. My library already has a custom column set up, which I’ve called DocCategory. I’m going to select every column (metadata) that I’d like to have available for editing on my page. After doing that, click Insert Fields As > Single Item Form.

I only have two columns, but that’s all that I need for this example. Feel free to have 10, if you want! The more columns you bring to the page, the more columns you’ll be able to edit.

But it still doesn’t do anything!!!

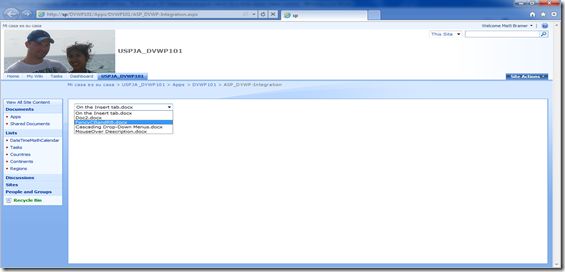

I know, I know… Stick with me and I’m sure you’ll find something useful. So far, we’ve added our ASP.net drop-down list, a Web Part Zone, and a DVWP Edit Form. Here’s a screenshot of where we should be at this point:

In the next few articles, I’ll show you how to filter this Web Part using the ASP.NET control and create a more dynamic Edit Form. If you have a question or suggestion, feel free to post a comment; I’ve already come up with a few myself. Please don’t be shy if the title doesn’t make sense yet. I love screenshots and am a visual learner, so I promise you will get plenty of screenshots along the way. If you need more, maybe I’ll do a video; just let me know if something isn’t making sense.